Versatility in engineering means adaptability and multi-functionality. For robotic automation, it signifies the ability to handle diverse tasks, easily switch between different operations, and thrive in changing environments. The current gap lies in developing agreed-upon frameworks and metrics that are both quantitative and context-appropriate, capturing not just mechanical capabilities but also cognitive adaptability, integration complexity, and economic value.

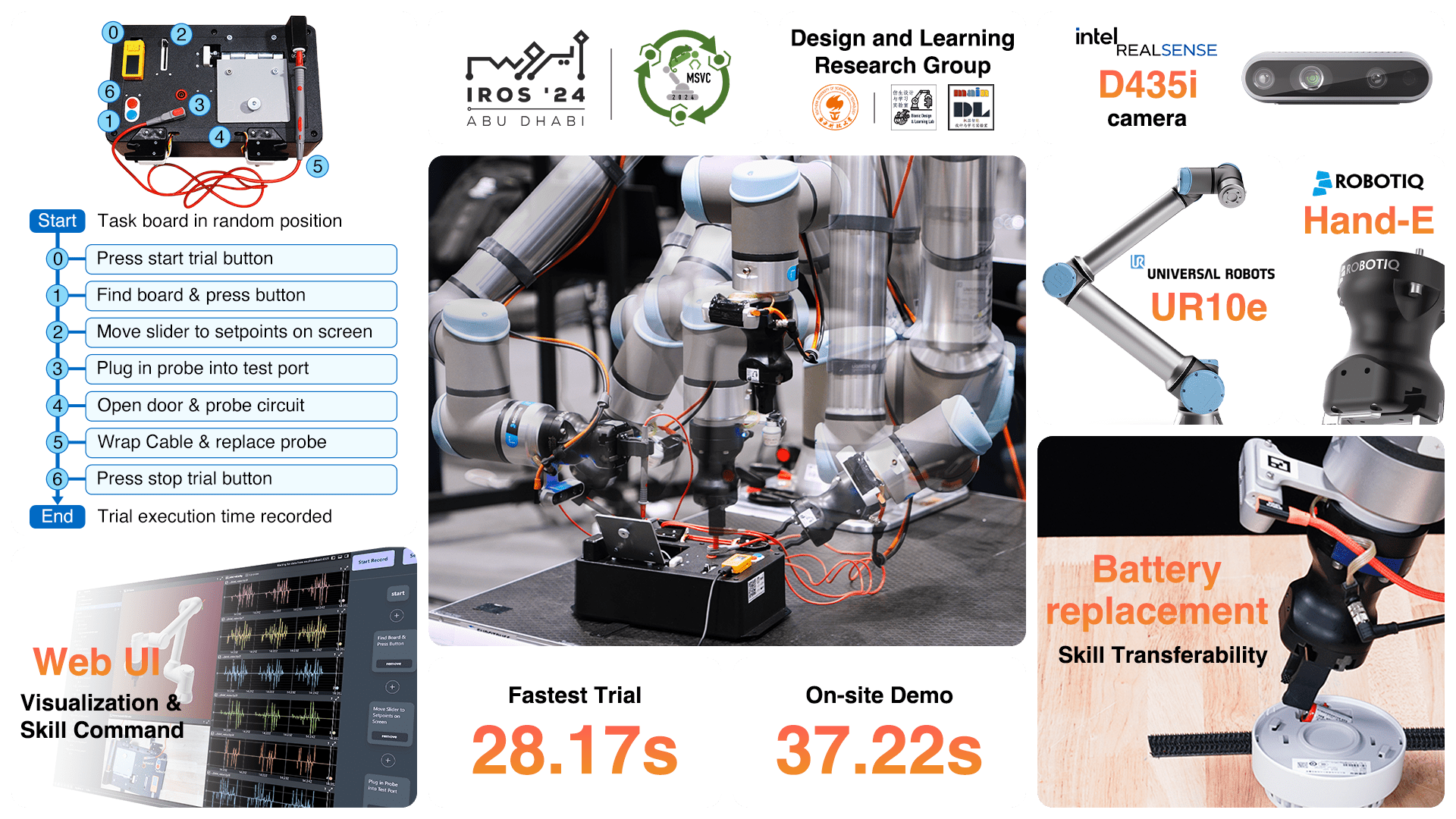

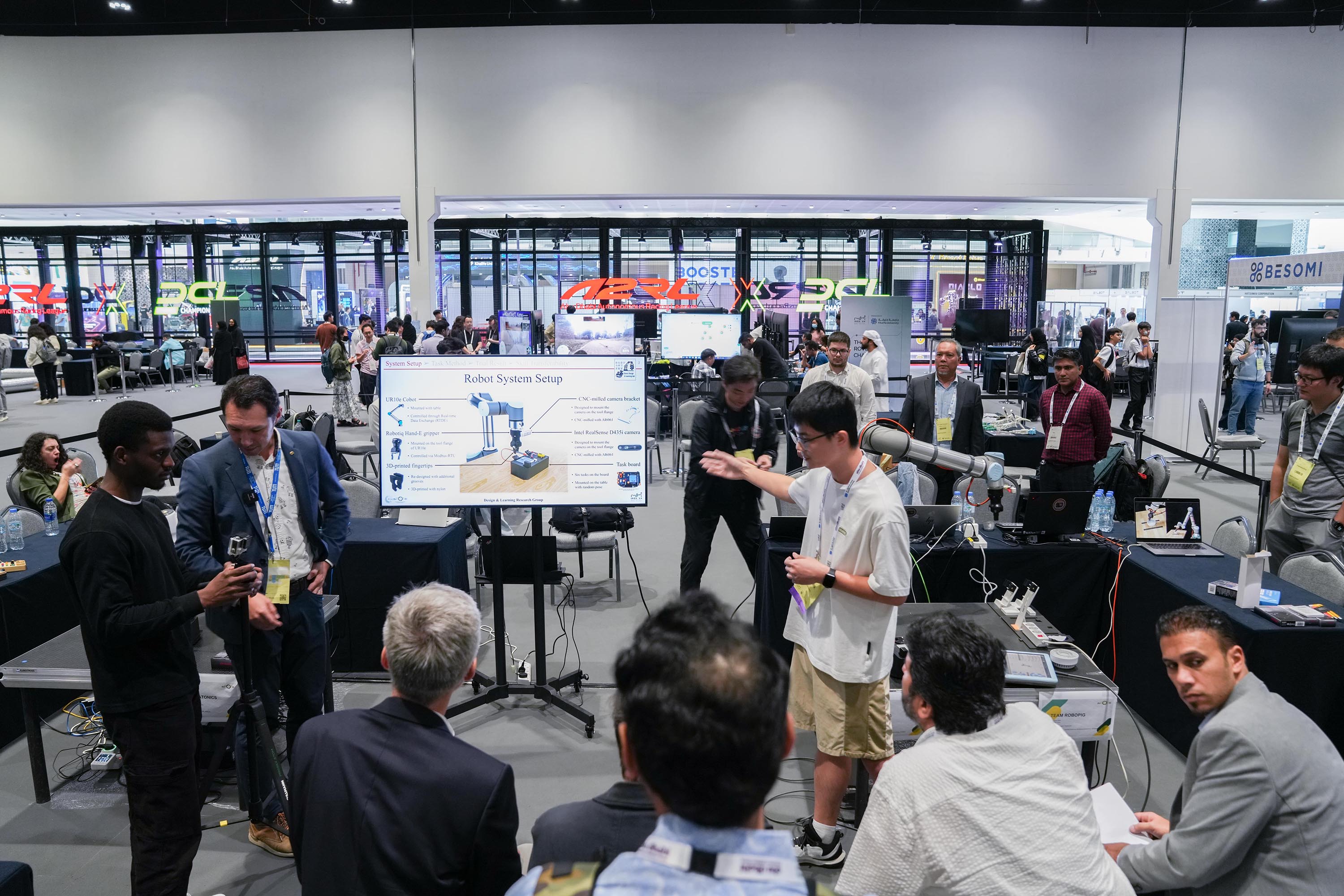

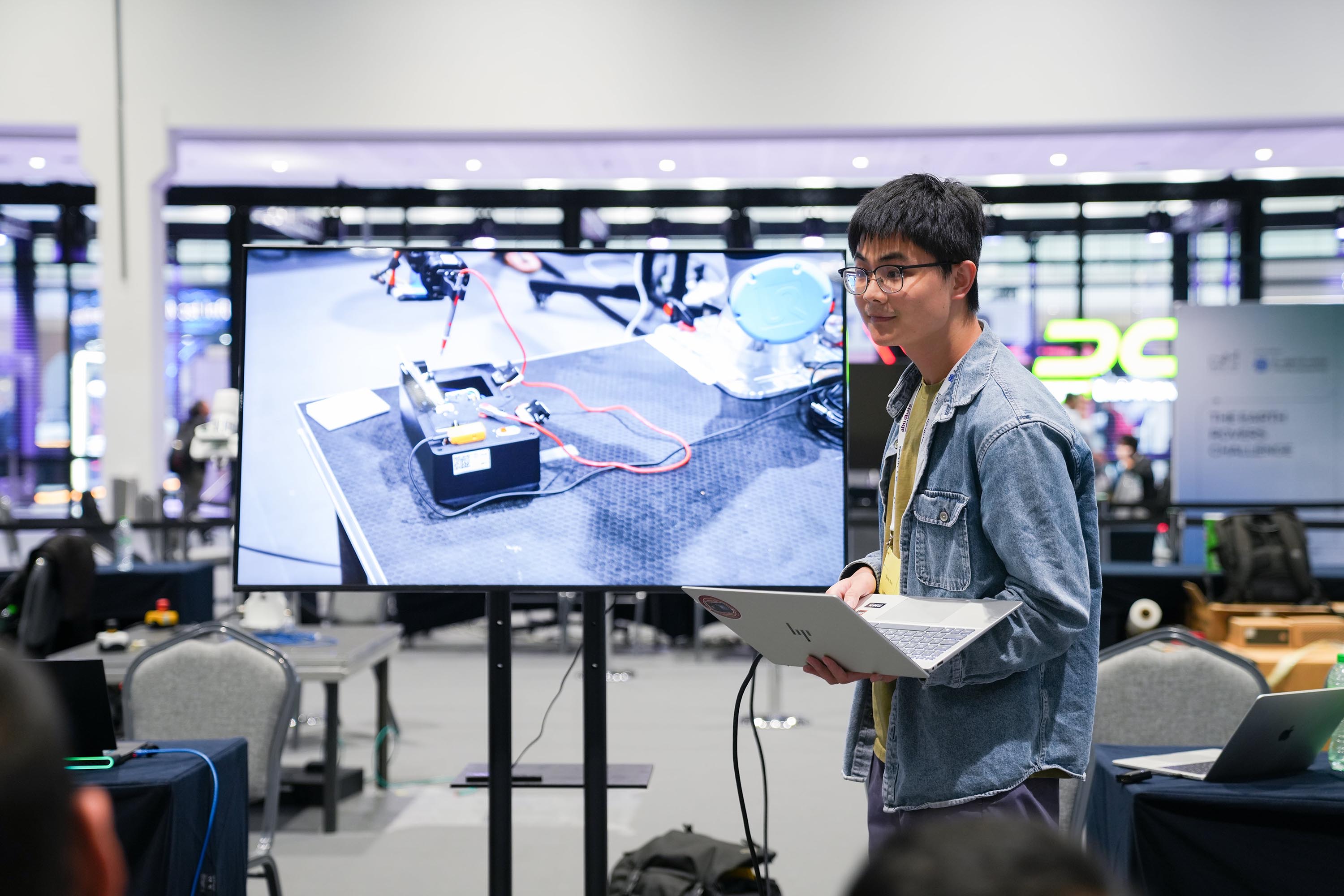

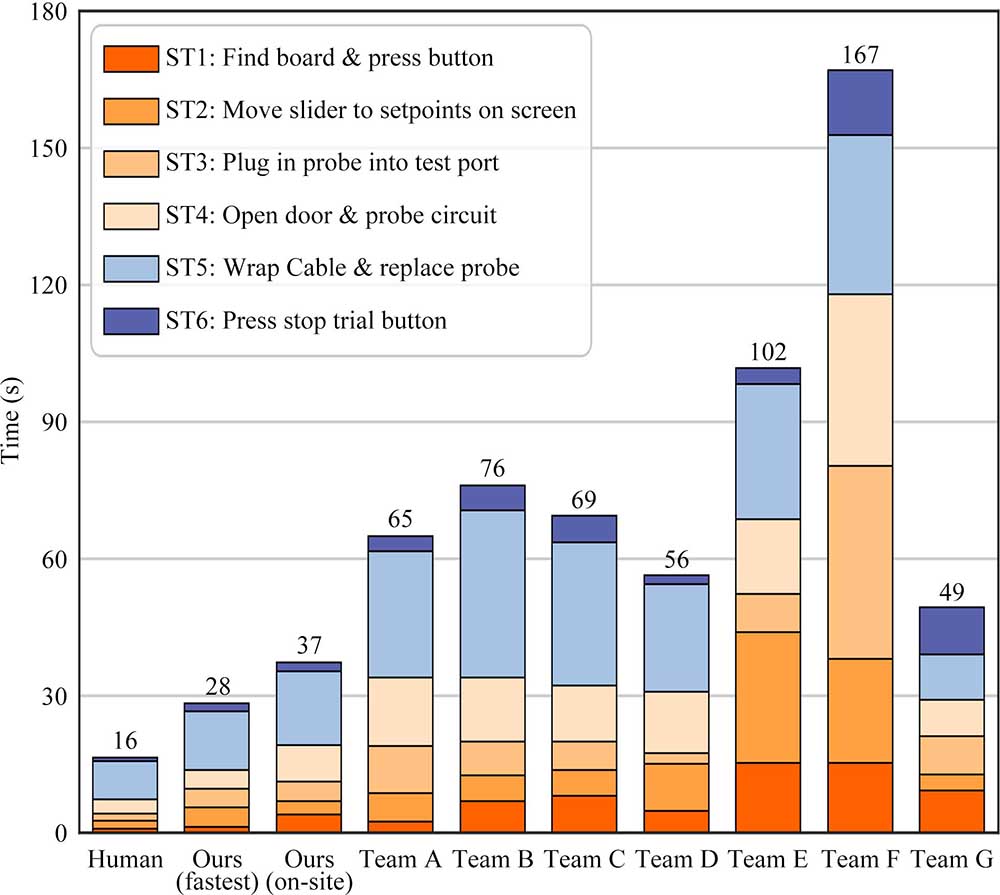

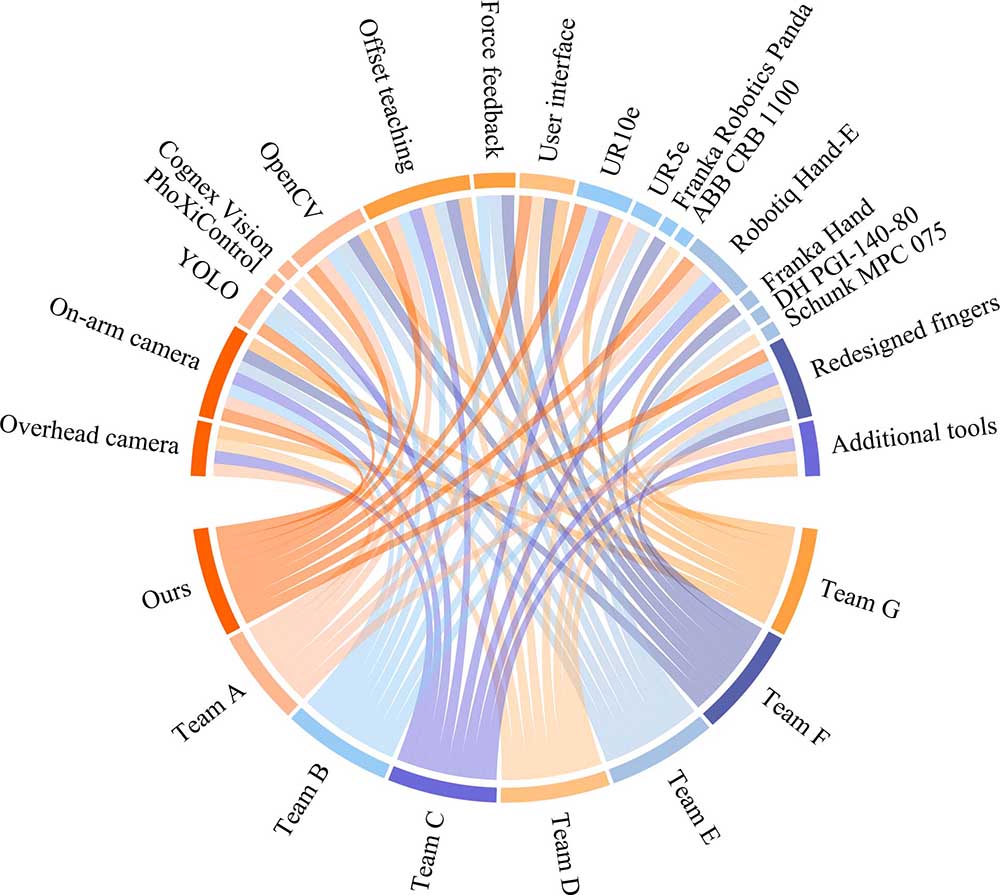

In this paper, we present the Design and Learning Research Group's (DLRG) solution for the euROBIN Manipulation Skill Versatility Challenge (MSVC) at IROS 2024 in Abu Dhabi, UAE. The MSVC, held annually since 2021, is part of the euROBIN project that seeks to advance transferable robot skills for the circular economy by autonomously performing tasks such as object localization, insertion, door operation, circuit probing, and cable management. We approached the standardized task board, which is provided by the event organizers and mimics industrial testing procedures, by structurally decomposing the task into subtask skills. We created a custom dashboard with drag-and-drop code blocks to streamline development and adaptation, enabling rapid code refinement and task restructuring and complementing the default remote web platform that records the performance. Our system completed the task board in 28.2 sec in the lab (37.2 sec on-site), nearly tripling the efficiency over the average best time of 83.5 sec by previous teams and bringing performance closer to a human baseline of 16.3 sec. By implementing subtasks as reusable code blocks, we facilitated the transfer of these skills to a distinct scenario, successfully removing a battery from a smoke detector with minimal reconfiguration.

We also provide suggestions for future research and industrial practice on robotic versatility in manipulation automation through globalized competitions, interdisciplinary efforts, standardization initiatives, and iterative testing in the real world to ensure that it is measured in a meaningful, actionable way.

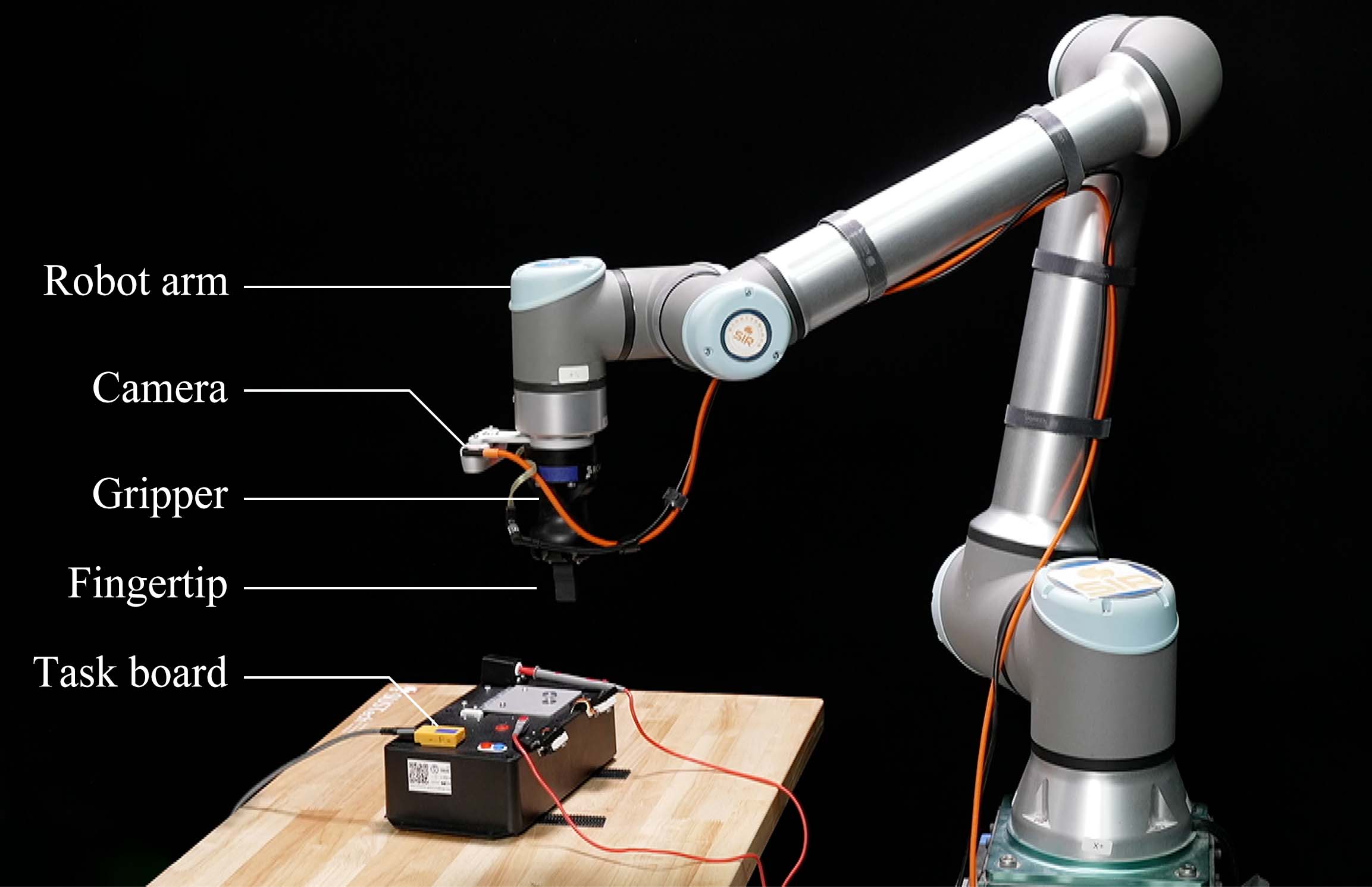

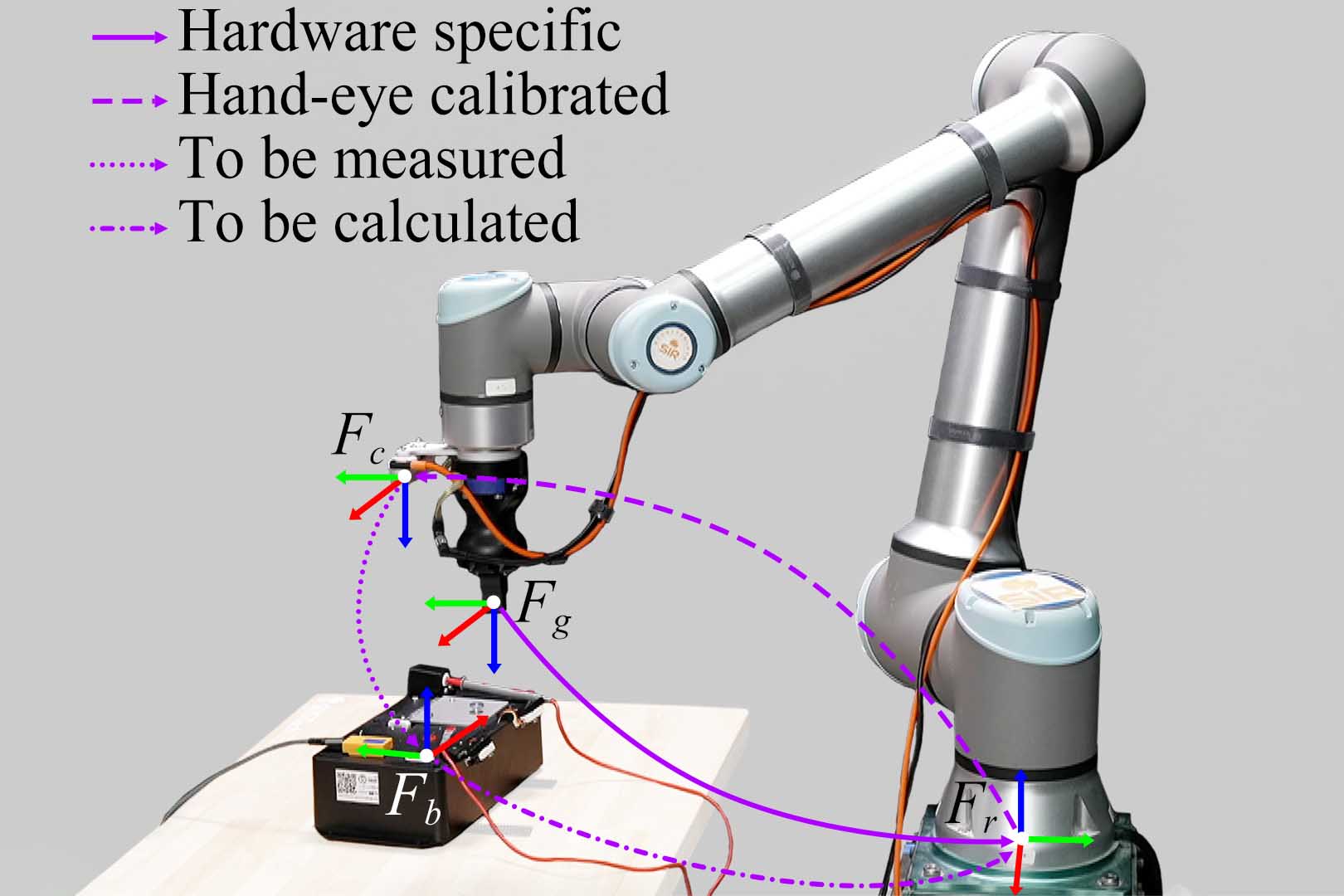

The robot system developed by DLRG for the competition includes a robot arm (UR10e, Universal Robots), a camera (D435, Intel RealSense), a gripper (Hand-E, Robotiq), and a pair of 3D-printed fingertips with minor customization for cable handling, all mounted on the open-sourced DeepClaw station previously developed by the team for a reliable and reconfigurable implementation.

| Equipment | Image | Description |

|---|---|---|

| UR10e Cobot |

|

The UR10e cobot is mounted on the DeepClaw station and controlled through the real-time data exchange (RTDE) interface by the laptop. |

| Robotiq Hand-E gripper |

|

The Hand-E gripper is mounted on the tool flange of the UR10e cobot and controlled via the Modbus RTU RS485 protocol. |

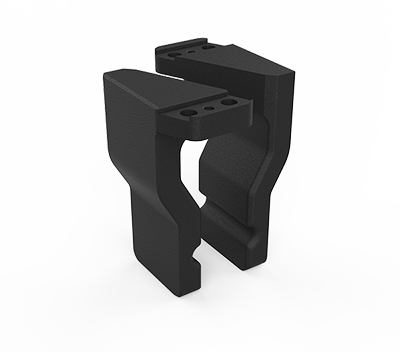

| 3D-printed fingertips |

|

The fingertips are re-designed based on the original fingertips of the Robotiq Hand-E gripper to adapt to the competition tasks by adding grooves. They are fabricated using nylon (PA12, HP) through multi-jet fusion (MJF) |

| Intel Realsense D435i camera |

|

The Realsense D435i RGB-D camera is mounted on the UR10e cobot through a bracket and connected to the laptop through a USB cable. |

| Camera bracket |

|

The camera bracket is designed to mount the Realsense D435i RGB-D camera on the UR10e cobot. It is frabricated by CNC with Al6061. |

| ZHIYUN FIVERAY M20 fill light (Optinal) |

|

The ZHIYUN FIVERAY M20 fill light is mounted on the camera to provide sufficient illumination. It is up to 2010 Lux (0.5m) and able to work for more than 40 minutes without charging. |

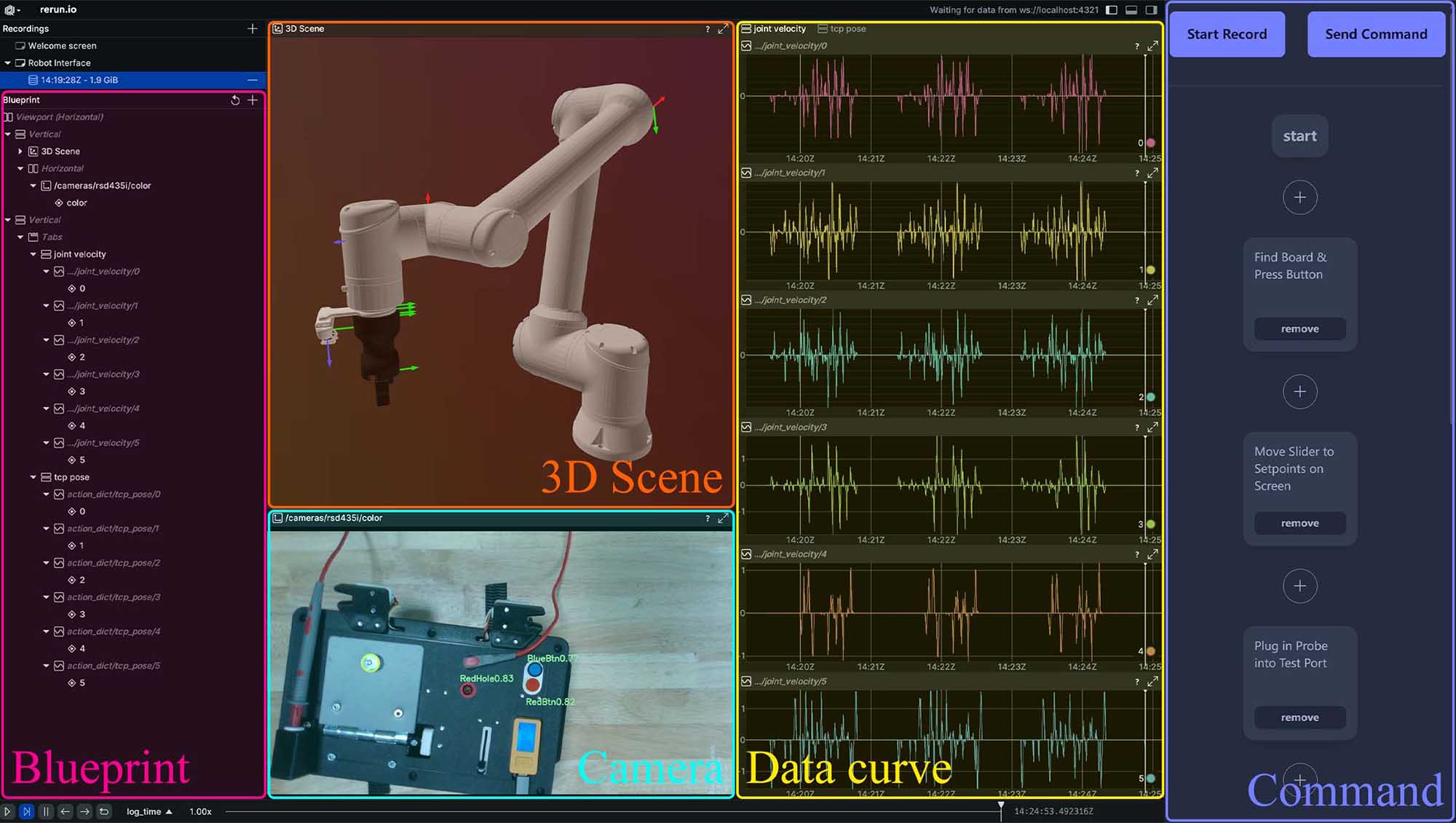

We also designed a web-based user interface. The left side of the interface is a blueprint panel outlining the robot arm's joints and links, the camera, and the gripper with fingertips. The middle includes a 3D scene of the robotic arm showing its current state in Cartesian space, a camera view of the live video stream from the wrist overlaid with the recognition status of key features, and a stack of data curve windows showing the robot's angles and velocities in joint space. The right side is a command panel with code blocks for each subtask skill and a drag-and-drop interface for rapidly restructuring and refining the overall task flow. We also added a record button to start and stop recording robot data for convenient execution and redeployment.

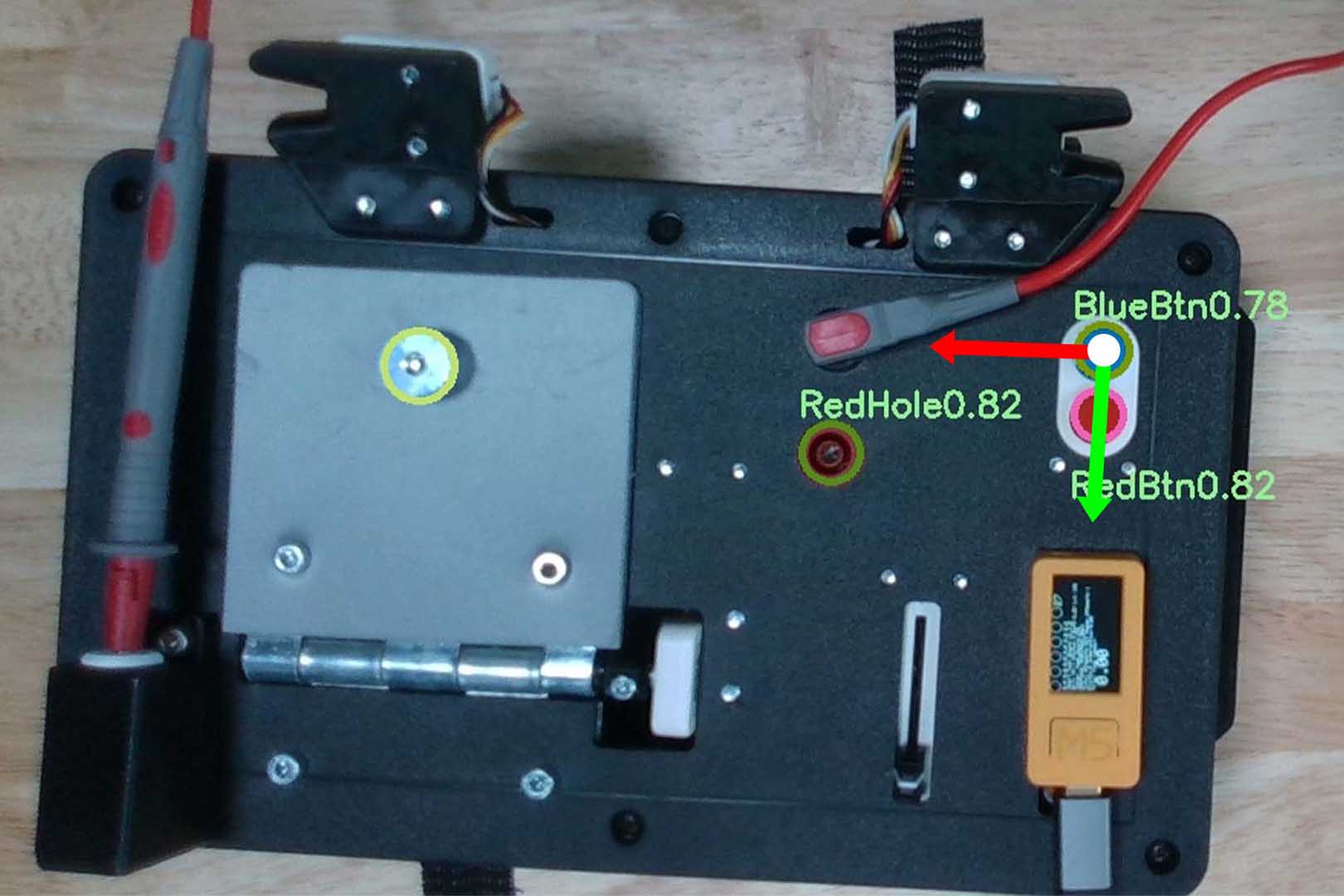

The task board is placed at a random position for each trial, so accurately locating it is crucial for successful task execution. A fine-tuned YOLOv8 model was used to detect the red button, the blue button, and the red test port. Because the positional relationship between these task board elements was known and fixed, the global 6D pose of the board could be estimated relative to the robot frame.
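The idea of recovering the board pose from a few detected features with a known layout can be illustrated with a minimal sketch. It is a simplification, not the team's implementation: it assumes the board lies flat on the table, so the pose reduces to a planar position and yaw, and it assumes the detections have already been converted to robot-frame coordinates. The function names and the layout coordinates are hypothetical.

```python
import math


def fit_planar_pose(board_pts, robot_pts):
    """Least-squares planar rigid fit so that robot = R(yaw) * board + t.

    board_pts: feature positions in the board frame (known layout).
    robot_pts: the same features as detected, in the robot frame.
    """
    n = len(board_pts)
    bcx = sum(p[0] for p in board_pts) / n
    bcy = sum(p[1] for p in board_pts) / n
    rcx = sum(q[0] for q in robot_pts) / n
    rcy = sum(q[1] for q in robot_pts) / n

    # Accumulate dot and cross products of the centred point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (bx, by), (rx, ry) in zip(board_pts, robot_pts):
        ax, ay = bx - bcx, by - bcy
        cx, cy = rx - rcx, ry - rcy
        s_dot += ax * cx + ay * cy
        s_cross += ax * cy - ay * cx

    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    tx = rcx - (c * bcx - s * bcy)
    ty = rcy - (s * bcx + c * bcy)
    return tx, ty, yaw


def board_to_robot(pose, point):
    """Map a board-frame point into the robot frame using (tx, ty, yaw)."""
    tx, ty, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * point[0] - s * point[1] + tx,
            s * point[0] + c * point[1] + ty)


# Hypothetical layout of three features in the board frame, in metres.
LAYOUT = [(0.0, 0.0), (0.10, 0.0), (0.0, 0.05)]
```

Once the pose is fitted, any other board element with a known layout position can be mapped into the robot frame with `board_to_robot`. A full 6D estimate would replace the planar fit with a 3D rigid registration over the same correspondences.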

To press the blue button, the gripper first moved to a position above it and then descended to press it. The gripper then retracted upward in preparation for the next subtask.
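The press motion described above amounts to three Cartesian waypoints. The sketch below is illustrative only: the `clearance` and `press_depth` values are assumed placeholders, and the function name is hypothetical.

```python
def press_waypoints(button_xyz, clearance=0.03, press_depth=0.005):
    """Return approach, press, and retract positions for a button.

    button_xyz: button top surface in the robot frame, in metres.
    clearance and press_depth are assumed values, not measured ones.
    """
    x, y, z = button_xyz
    above = (x, y, z + clearance)      # hover above the button
    pressed = (x, y, z - press_depth)  # push slightly below the surface
    return [above, pressed, above]     # retract to the hover position
```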

After the gripper approached and securely gripped the slider, the screen was detected using another fine-tuned YOLOv8 model. The red pointer indicated the current position, the yellow pointer the first target position, and the green pointer the second target position. The pixels of the pointers displayed on the screen were extracted by color to calculate the pixel distance error, which was then projected to a metric distance in the robot frame to guide the gripper in moving the slider to the target.
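A minimal sketch of the color-based pointer error follows. It assumes the screen crop is available as rows of `(r, g, b)` tuples, that the slider axis is aligned with the image columns, and that a single `metres_per_pixel` scale is known from calibration. The color thresholds, function names, and the scale are all assumptions for illustration.

```python
def color_centroid_x(image, is_match):
    """Mean column index of all pixels accepted by is_match, or None."""
    xs = [x for row in image for x, px in enumerate(row) if is_match(px)]
    if not xs:
        return None
    return sum(xs) / len(xs)


def is_red(px):
    r, g, b = px
    return r > 200 and g < 80 and b < 80


def is_yellow(px):
    r, g, b = px
    return r > 200 and g > 200 and b < 80


def slider_correction(image, metres_per_pixel):
    """Signed slider displacement (metres) from red pointer to yellow target."""
    current = color_centroid_x(image, is_red)
    target = color_centroid_x(image, is_yellow)
    if current is None or target is None:
        return None  # one of the pointers is not visible
    return (target - current) * metres_per_pixel
```

The same function can be reused for the second target by swapping `is_yellow` for a green predicate.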

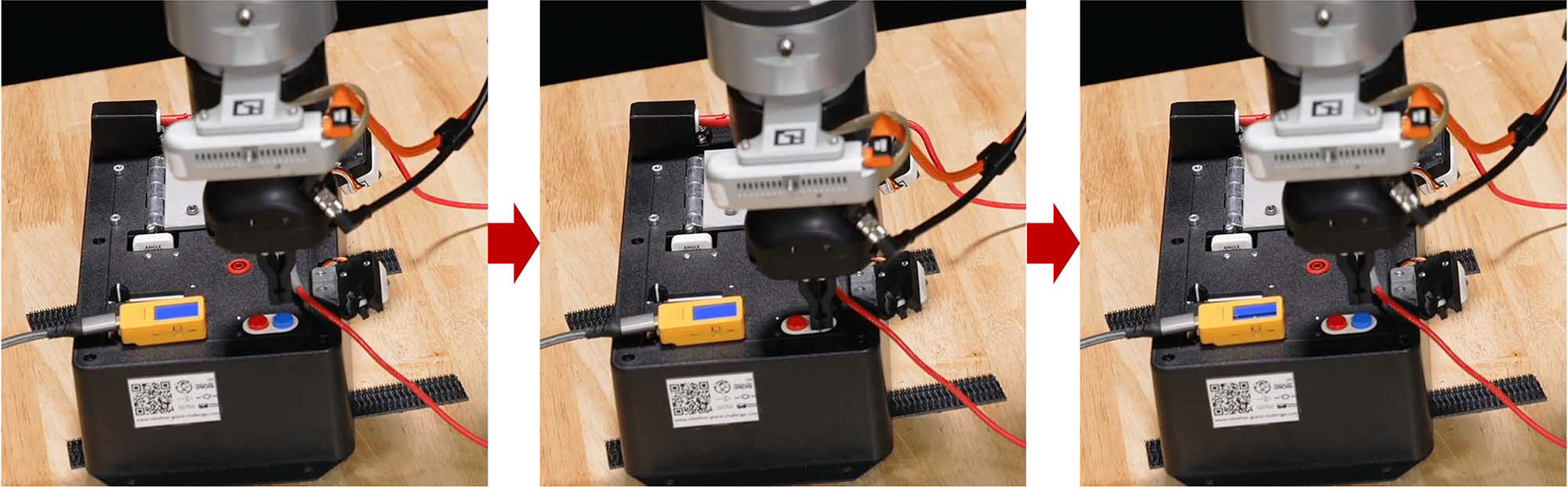

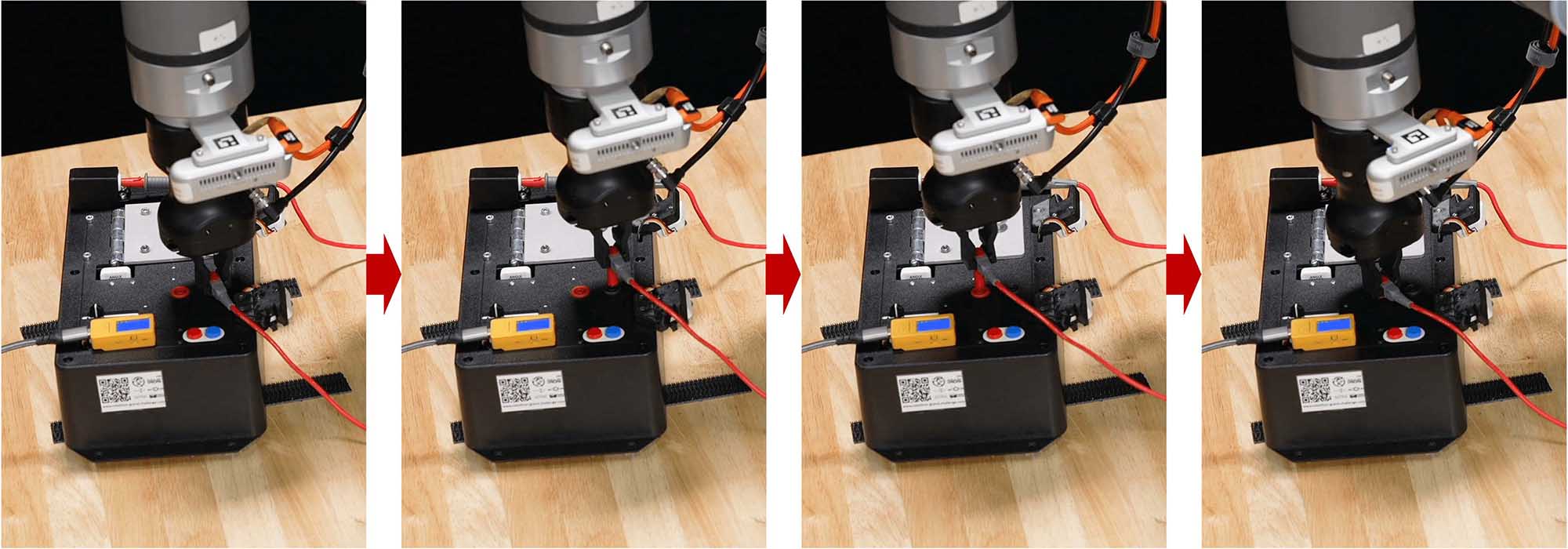

The gripper first moved above the probe and then descended to grip it. The probe was then plugged into the red test port. To make the cable wrapping in subtask 5 more deterministic, the probe was rotated to a predetermined angle.

To speed up task execution, the gripper pulled out the probe and pried the door open with the probe tip instead of gripping the door. The probe was then inserted into the testing slot and replaced. The challenge of this subtask was that contact with the task board could change the pose of the probe in the gripper, but the grooves on the fingertips helped the gripper grasp the probe securely, increasing the accuracy and robustness of the whole process.

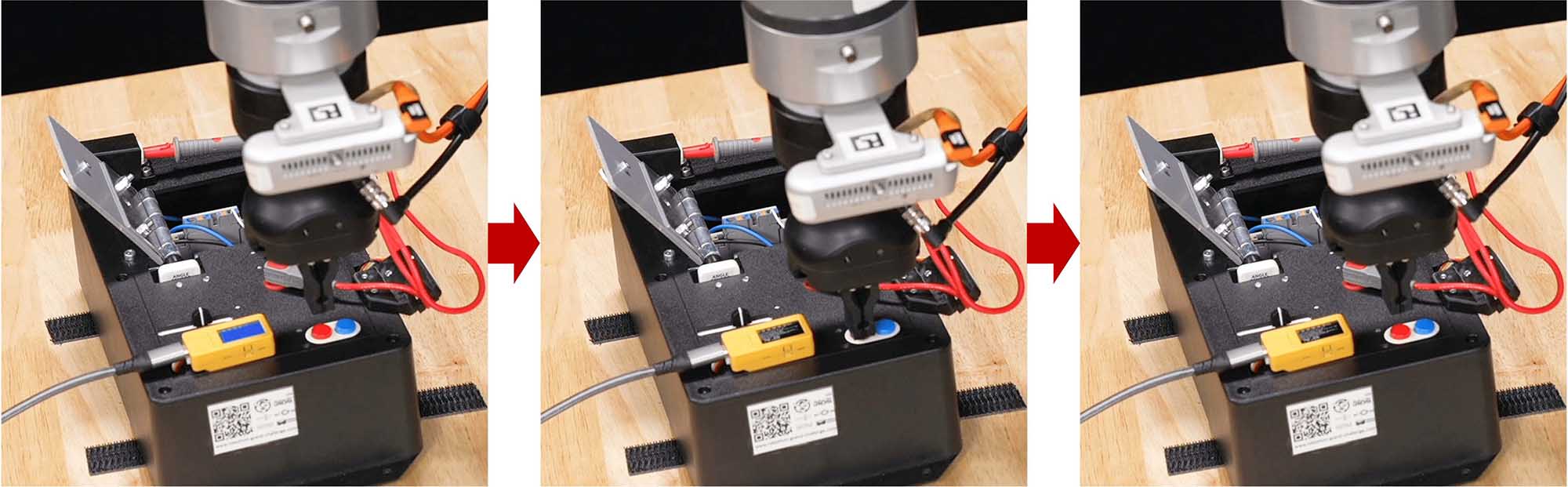

To wrap the cable, we collected gripper poses from human-demonstrated motions and transformed them into the task board frame, making the trajectory invariant to the absolute position and orientation of the board.
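The frame change behind this invariance can be sketched with homogeneous transforms. This is a minimal illustration under assumptions, not the team's code: poses are 4x4 nested lists, the helper names are hypothetical, and `pose_about_z` only builds yaw-rotated poses to keep the example short.

```python
import math


def matmul(a, b):
    """Product of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Inverse of a rigid transform: rotation R^T, translation -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def pose_about_z(x, y, z, yaw):
    """Pose with translation (x, y, z) and rotation yaw about the z axis."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def to_board_frame(robot_T_board, robot_T_gripper_list):
    """Express demonstrated gripper poses relative to the board."""
    board_T_robot = invert_rigid(robot_T_board)
    return [matmul(board_T_robot, g) for g in robot_T_gripper_list]


def to_robot_frame(robot_T_board, board_T_gripper_list):
    """Replay board-relative poses for a newly estimated board pose."""
    return [matmul(robot_T_board, g) for g in board_T_gripper_list]
```

The demonstration is stored once via `to_board_frame` with the board pose at recording time, then replayed via `to_robot_frame` with the board pose estimated at the start of each trial.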

Pressing the stop-trial button (red button) was similar to pressing the blue button. After the gripper pressed the button, the task board stopped recording time and the trial was finished.

We performed a successful high-speed trial at 2.5 m/s, completing the task board in 28.2 sec, nearly tripling the efficiency over the average best time of 83.5 sec by previous teams and bringing performance closer to a human baseline of 16.3 sec.
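The ratios behind these comparisons can be checked directly from the reported times. The snippet below only restates the arithmetic; the variable and function names are illustrative.

```python
# Completion times reported in the text, in seconds.
PREVIOUS_BEST_AVG_S = 83.5
HUMAN_BASELINE_S = 16.3
LAB_S = 28.2
ONSITE_S = 37.2


def speedup(reference_s, measured_s):
    """How many times faster the measured run is than the reference."""
    return reference_s / measured_s


lab_vs_previous = speedup(PREVIOUS_BEST_AVG_S, LAB_S)        # about 2.96
onsite_vs_previous = speedup(PREVIOUS_BEST_AVG_S, ONSITE_S)  # about 2.24
lab_vs_human = LAB_S / HUMAN_BASELINE_S                      # about 1.73
```

The lab run is therefore roughly 2.96 times faster than the previous average best time, while still taking about 1.73 times as long as the human baseline.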

During the on-site competition at IROS 2024 in Abu Dhabi, our system completed the task board in 37.2 sec, winning the Fastest Automated Task Board Solution Award.

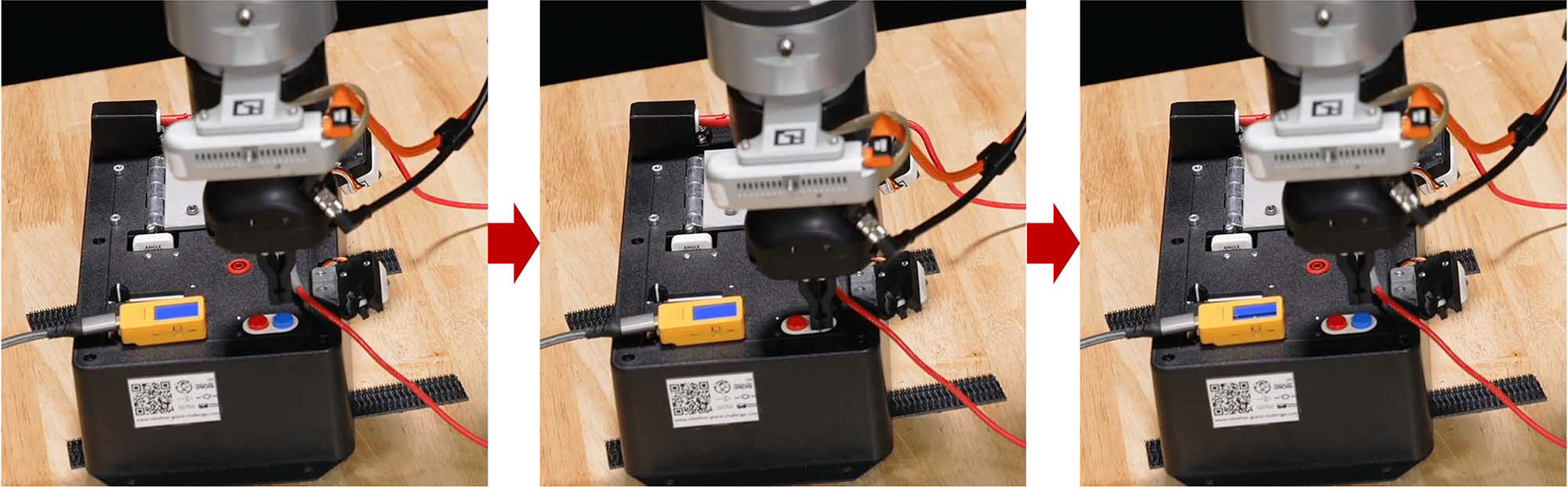

The implemented task board skills, such as grasping, dragging, inserting, and pressing, readily transfer to broader applications, such as replacing a smoke detector's battery, which is part of the competition requirement. We decomposed this new scenario into five subtasks directly mapped from previous skills with adjusted TCP trajectories and gripper angles.
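The reuse of skills as reorderable blocks can be sketched as a registry of parameterized functions plus a task list. Everything here is hypothetical: the skill stubs only log what they would do, and the example sequence is a placeholder ordering, not the decomposition the team actually used for the smoke detector.

```python
def press(log, target):
    log.append(f"press {target}")


def grasp(log, target):
    log.append(f"grasp {target}")


def drag(log, target, distance_m):
    log.append(f"drag {target} by {distance_m} m")


def insert(log, target, port):
    log.append(f"insert {target} into {port}")


# Registry mapping block names to skill implementations (stubs here).
SKILLS = {"press": press, "grasp": grasp, "drag": drag, "insert": insert}


def run_task(blocks):
    """Execute (skill_name, params) blocks in order and return the log."""
    log = []
    for name, params in blocks:
        SKILLS[name](log, **params)
    return log


# Placeholder sequence showing how blocks are recombined for a new task.
EXAMPLE_TASK = [
    ("grasp", {"target": "cover"}),
    ("drag", {"target": "cover", "distance_m": 0.02}),
    ("grasp", {"target": "battery"}),
]
```

Under this structure, adapting to a new scenario means editing the block list and its parameters, such as target poses and gripper openings, rather than rewriting the skills themselves.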

We compared the performance of our system with a human baseline and seven other teams (the five best teams in 2023 and two teams in 2024). The results indicate that our system had a significant advantage in execution speed over the other teams in all subtasks, especially ST1, ST4, and ST5, where its times were close to the human level.

While preparing for the competition, despite the availability of more advanced tools such as soft robotic fingers with omni-directional adaptation and state-of-the-art tactile sensing, the team chose a more structured, industrial solution in which efficient task completion is emphasized over intelligence integration or exploration. The outcomes of such competitions seem to reveal a subtle trend: as tasks become more structured and performance-oriented, solutions tend to converge on efficient but relatively inflexible methods. Similar observations can be made in global competitions like the Amazon Picking Challenge. To counter this trend, future competitions or industrial testbeds should introduce greater complexity and variability through diverse objects, dynamic environments, and evolving subtasks to encourage the use of advanced perception, learning algorithms, and adaptive end-effectors. Such challenges will drive the development of intuitive interfaces and autonomous reconfiguration, bridging the gap between human and robot adaptability.